
To get real value from data science, organisations must operationalise their data and productionise their AI models. This is according to Prinavan Pillay, Director at Teraflow.ai, a data engineering firm that specialises in these services, with offices in Johannesburg, Cape Town and London.

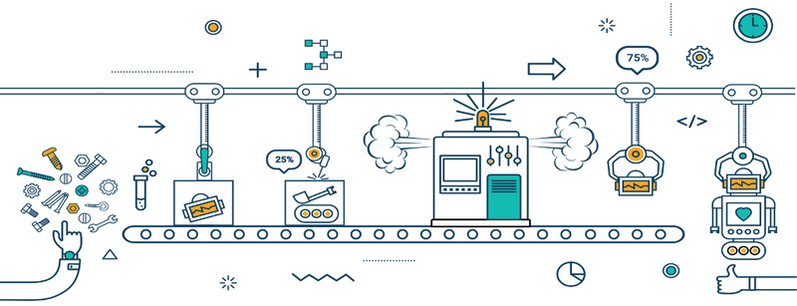

“Once these two key problems are solved, enterprises can expect to accelerate their data science projects to the point where they are seeing major innovations in their operations,” he says. But what exactly do the terms mean?

Data science as a business function

“Think of data science as a factory,” suggests Pillay. “Raw material - data - goes in, flows through a repeatable production process, and AI models come out, ready to add intelligent automation to a company’s operations.”

Although each model accomplishes a different task, the way in which models are created can be standardised. However, like software, models must be updated continually to improve their accuracy, correct flaws, and adapt as the underlying data changes over time. How efficient this process is determines how quickly a company can innovate.

Operationalising data

A major problem with data science is that its raw material - data - does not come neatly packaged and ready for production. Instead, it tends to be spread across enterprise databases, document folders, spreadsheets, production systems, email servers, social media and more. It is also siloed within specific business functions, which limits its innovation potential.

Before data can be used, it must be collected, cleaned, transformed into a standard format, and stored in a central data store that is readily accessible to the data science team. Rather than being worked out piecemeal over time, this process should be designed and implemented in advance, freeing data scientists to focus on selecting algorithms and training models.

“A repeatable, highly efficient system of processing data before it is needed is the essence of operationalising data,” says Pillay.

Productionising AI models

As an AI system is developed, its model is trained: the trained model is a representation of statistical patterns that the system can later use to recognise similar data. A large number of applications can produce a trained AI model, and each comes with its own output format.
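As a hypothetical illustration of the format problem, the Python sketch below fits a toy linear model and saves it two ways: with the standard `pickle` module, whose output is in practice readable only from Python, and as plain JSON under a made-up schema (`toy-linear-v1`, with `weight` and `bias` fields) that any language with a JSON parser could read. The schema and the function names are assumptions for illustration only; real exchange formats such as ONNX or PMML are far richer, and this is not either of them.

```python
import json
import pickle


def fit_line(xs, ys):
    """Fit y = weight * x + bias by ordinary least squares.

    Stands in for the output of any training tool (hypothetical example).
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    weight = cov / var
    bias = mean_y - weight * mean_x
    return {"weight": weight, "bias": bias}


model = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])

# Tool-specific format: pickle bytes are, in practice, only loadable from Python.
pickled = pickle.dumps(model)

# Neutral format: a small, documented JSON schema any language can parse.
# The field names below are an assumed schema, not a real standard.
exported = json.dumps({
    "format": "toy-linear-v1",
    "type": "linear",
    "weight": model["weight"],
    "bias": model["bias"],
})


def predict_from_json(doc, x):
    """Score one input using only the exported JSON document."""
    spec = json.loads(doc)
    if spec.get("type") != "linear":
        raise ValueError("unsupported model type")
    return spec["weight"] * x + spec["bias"]


print(predict_from_json(exported, 10.0))
```

Predicting from the JSON copy needs nothing more than a JSON parser and one multiplication, which is the property that lets developers in other languages call a model without knowing how it was trained.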
In addition, the various programming languages used throughout a company may not be able to read these formats, restricting their use in corporate systems. As a result, getting a model into production is often tricky. This has led to the rise of several model exchange environments, which allow trained models to be exported and deployed to highly scalable systems. Developers can then call and import these models in whatever format suits their needs, without needing to know machine learning.

“As with gathering data, the method of turning models into useful enterprise objects must be standardised and repeatable, and this is what is meant by productionising AI models,” says Pillay.

The right approach

Manufacturers wouldn’t start producing goods until they had acquired the correct raw materials, laid out their factory in a practical manner and developed a system for delivering their finished products. The same should be true of data science.

“Organisations who address the twin concerns of operationalising data and productionising AI will gain a much greater return on their data science investment, and unleash innovation across their enterprise,” concludes Pillay.

ENDS

MEDIA CONTACT: Stephné du Toit, 084 587 9933, www.atthatpoint.co.za

For more information on Teraflow.ai please visit:
Website: https://teraflow.ai/
LinkedIn: https://www.linkedin.com/company/teraflow/
Facebook: https://www.facebook.com/teraflowai-107896893957011/
